Scientists create speech using brain signals

At a Glance

- Scientists used brain signals recorded from patients with epilepsy to program a computer to mimic natural speech.

- This advance could one day help certain patients without the ability to speak to communicate.

Losing the ability to speak can have devastating effects for people whose facial, tongue, and larynx muscles have been paralyzed due to stroke or other neurological conditions.

Technology has helped these patients to communicate through devices that translate head or eye movements into speech. Because these systems involve the selection of individual letters or whole words to build sentences, communication can be slow. The technology can only generate up to 10 words per minute, while people speak at roughly 150 words per minute.

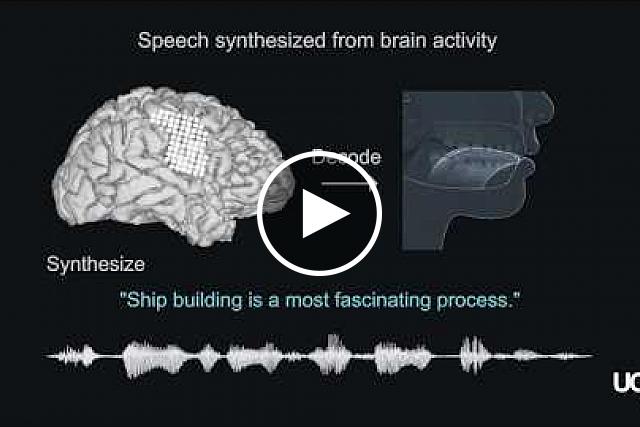

Instead of recreating sounds based on individual letters or words, a team led by Dr. Edward Chang at the University of California, San Francisco, looked for ways to generate speech by computer directly from brain signals. The team studied five patients with epilepsy who’d had electrodes placed in their brains to detect seizures prior to surgery. They focused on the brain activity involved in controlling the more than 100 muscles needed to produce speech. The research was supported in part by an NIH Director's New Innovator Award, the NIH BRAIN Initiative, and NIH's National Institute of Neurological Disorders and Stroke (NINDS). Results were published on April 24, 2019, in Nature.

The researchers first recorded signals from the brain area that produces language while participants read hundreds of sentences out loud. Using this data, the team then mapped out the vocal movements the participants used to make the different sounds, including how they moved their lips, tongue, jaw, and vocal cords. Next, the researchers programmed this information into a computer with machine-learning algorithms to decode the brain activity data and produce synthetic speech.
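The two-step idea described above (brain activity to vocal movements, then vocal movements to sound) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the simple linear maps stand in for the study's machine-learning models, and the weights, channel counts, and names such as `TO_MOVEMENT` and `decode_frame` are hypothetical, not taken from the study.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def apply_linear(weights: Matrix, x: Vector) -> Vector:
    """Multiply a weight matrix by a feature vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def decode_frame(neural: Vector, to_movement: Matrix, to_sound: Matrix) -> Vector:
    """Decode one time frame in two stages.

    Stage 1: brain activity -> estimated vocal tract movements.
    Stage 2: vocal tract movements -> sound features for a synthesizer.
    """
    movement = apply_linear(to_movement, neural)
    return apply_linear(to_sound, movement)


# Hypothetical toy weights: 3 neural channels -> 2 articulators -> 2 sound features.
TO_MOVEMENT = [[1.0, 0.0, 1.0],
               [0.0, 2.0, 0.0]]
TO_SOUND = [[1.0, 1.0],
            [0.0, 1.0]]

# Two made-up time frames of neural activity.
recording = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
sound_frames = [decode_frame(frame, TO_MOVEMENT, TO_SOUND) for frame in recording]
print(sound_frames)
```

The point of the intermediate step is that the decoder first estimates how the vocal tract is moving and only then converts those movements to sound, rather than mapping brain signals to sound in a single jump.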

Volunteers were then asked to listen to 325 synthesized single words or 101 synthesized sentences and transcribe what they heard. When choosing from a list of 25 possible words, they accurately identified more than half of the synthesized words and transcribed the synthesized sentences without any errors 43% of the time. Accuracy varied with the number of syllables in the words and the number of possible words listeners had to choose from.
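As a rough illustration of how listening results like these can be scored, the sketch below computes the share of single words identified correctly and the share of sentences transcribed with no errors. The trial data and function names are hypothetical, and the 1-in-25 chance level assumes a listener guessing uniformly at random from the word list; none of this is the study's actual scoring code.

```python
from typing import List, Tuple

# Each trial pairs what the computer said with what the listener wrote down.
Trial = Tuple[str, str]


def word_accuracy(trials: List[Trial]) -> float:
    """Fraction of single words the listener identified correctly."""
    return sum(spoken == heard for spoken, heard in trials) / len(trials)


def perfect_sentence_rate(trials: List[Trial]) -> float:
    """Fraction of sentences transcribed without any word errors."""
    return sum(spoken.split() == heard.split() for spoken, heard in trials) / len(trials)


# Chance of a correct pick when guessing at random from a 25-word list (assumption).
CHANCE_LEVEL = 1 / 25

# Made-up example trials, not data from the study.
word_trials = [("ship", "ship"), ("rabbit", "rabbit"),
               ("count", "mount"), ("garden", "garden")]
sentence_trials = [("the boat left early", "the boat left early"),
                   ("she opened the window", "she opened the window"),
                   ("they bought thirty apples", "they bought dirty apples")]

print(word_accuracy(word_trials))
print(perfect_sentence_rate(sentence_trials))
print(CHANCE_LEVEL)
```

Under that random-guessing assumption, identifying more than half of the words from a 25-word list is far above the 4% expected by chance.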

The decoded vocal movement maps were similar across the study participants, suggesting that this step may be generalizable across patients. That may make it easier to apply these findings to multiple individuals.

“For the first time, this study demonstrates that we can generate entire spoken sentences based on an individual’s brain activity,” Chang says. “This is an exhilarating proof of principle that with technology that is already within reach, we should be able to build a device that is clinically viable in patients with speech loss.”

The researchers plan to design a clinical trial involving patients who are paralyzed and have speech impairments to determine whether this approach could be used to produce synthetic speech for them.

Related Links

- Engineering Functional Vocal Cord Tissue

- Brain Mapping of Language Impairments

- How the Brain Sorts Out Speech Sounds

- Understanding How We Speak

- Words and Gestures Are Translated by Same Brain Regions

- What Is Voice? What Is Speech? What Is Language?

- Assistive Devices for People with Hearing, Voice, Speech, or Language Disorders

References: Speech synthesis from neural decoding of spoken sentences. Anumanchipalli GK, Chartier J, Chang EF. Nature. 2019 Apr;568(7753):493-498. doi: 10.1038/s41586-019-1119-1. Epub 2019 Apr 24. PMID: 31019317.

Funding: NIH’s National Institute of Neurological Disorders and Stroke (NINDS), NIH BRAIN Initiative, and NIH Director’s New Innovator Award; New York Stem Cell Foundation; William K. Bowes Foundation; Howard Hughes Medical Institute; and Shurl and Kay Curci Foundation.
