Planning a Genomic Characterization Study? Tips for Collecting and Processing Biospecimens

January 16, 2018, by Peggy I. Wang, Ph.D.

Many researchers are preparing to take on a genomic characterization project, a task filled with the potential for novel biological discovery but also with expensive risks and mistakes. They may wonder: Who should handle my samples, and what protocols should be used? How do I know if my samples are high quality? While sequencing technology is getting cheaper and better, a lot of planning and many crucial decisions still need to be made, even just in collecting and processing biospecimens.

Learn From the Old and Experienced

The Cancer Genome Atlas (TCGA) officially began in 2006. In high-throughput sequencing technology years, TCGA is, well, very old. What began as three pilot projects grew to 33 cancers at a time when the technology was rapidly changing and various sequencing platforms were competing to dominate the field. This presented many challenges in identifying and selecting appropriate methods for collecting tissue samples, extracting molecular analytes, and performing molecular characterization. TCGA had to consider the quality, cost, timing, and interoperability of all these moving parts, with the overarching goal of producing consistent data that would remain relevant and valuable for the scientific community to mine for years.

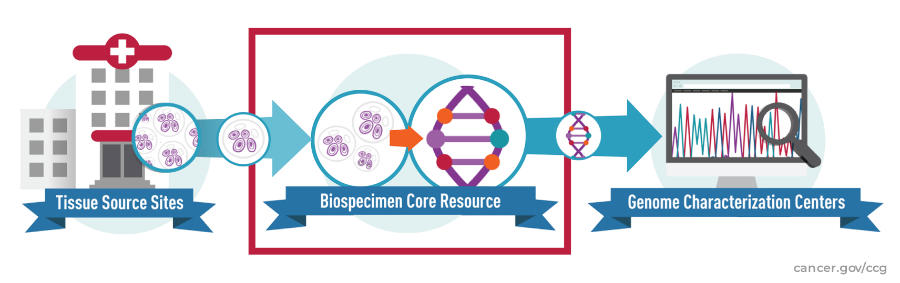

Through years of trial and error, TCGA developed its so-called Genome Characterization Pipeline, an assembly of facilities, institutes, and researchers who work together to process patient samples into data for the scientific community to analyze. The Center for Cancer Genomics (CCG) manages the pipeline for use by many other characterization projects currently underway throughout NCI. Dr. Roy Tarnuzzer, the Biospecimen Core Resource (BCR) Program Manager for TCGA, discusses the biospecimen processing aspect of the pipeline and provides tips for planning a study.

Do You (and Your Samples) Have What It Takes?

“A study can only be as good as the specimens and their associated clinical annotation,” says Dr. Tarnuzzer. “Before diving in, determine what biospecimen and clinical prerequisites are needed for your project’s objectives.” For example, if you are studying tumor response to chemotherapy, do you have the timing and dosage of each treatment? In a project about normal adjacent tissue, do you have the distance from the tumor to the normal sample? See Table 1 for a sample planning-phase checklist. Generally, biospecimens should be well-annotated in:

- Collection and handling process – Detailed documentation of how samples are collected, processed, and stored tends to lead to higher quality and more consistent biospecimens. Retrospective and prospective studies also have different considerations (Table 2).

- Clinical features – Work with subject matter experts to identify and standardize the clinical data needed for a meaningful analysis. At its inception, TCGA’s focus was building a map of the genomic changes in cancer and thus, only a few basic clinical features were collected. In contrast, the current wave of CCG projects, which aim to connect clinical outcomes with the characterization, collect over 125 features, all standardized and registered in the Cancer Data Standards Registry and Repository.
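As a rough illustration of the prerequisite check described above, the following sketch flags samples that lack the annotations a given study objective needs. The study types and field names (`treatment_date`, `treatment_dose`, `distance_from_tumor_mm`) are hypothetical, chosen only to mirror the two examples in the text; they are not CCG's actual data elements.

```python
# Hypothetical required annotations per study objective (illustrative only).
REQUIRED_ANNOTATIONS = {
    "chemotherapy_response": ["treatment_date", "treatment_dose"],
    "normal_adjacent_tissue": ["distance_from_tumor_mm"],
}


def missing_annotations(study_type, samples):
    """Return {sample_id: [missing fields]} for samples lacking required annotations."""
    required = REQUIRED_ANNOTATIONS[study_type]
    gaps = {}
    for sample in samples:
        # A field counts as missing if it is absent, None, or an empty string.
        missing = [field for field in required if sample.get(field) in (None, "")]
        if missing:
            gaps[sample["sample_id"]] = missing
    return gaps


samples = [
    {"sample_id": "S1", "treatment_date": "2017-03-01", "treatment_dose": "50 mg"},
    {"sample_id": "S2", "treatment_date": "2017-03-08", "treatment_dose": None},
]

print(missing_annotations("chemotherapy_response", samples))
# {'S2': ['treatment_dose']}
```

Running a check like this before committing samples to a study makes gaps visible while there is still time to go back to the collection site.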

Let People Do What They’re Good At

In practice, CCG breaks down the BCR’s function of collecting samples and producing molecular analytes into three discrete pieces:

- Biospecimen collection and processing – Assembling samples, checking sample quality, extracting DNA, RNA, and other necessary analytes, and sending analytes on for characterization.

- Clinical data collection and curation – Identifying and registering standardized clinical data points, working with collection sites to ensure clinical data is properly collected and in the right format.

- Biospecimen storage – Providing temporary storage of samples being processed and long-term, indefinite storage of residual tissue and analytes.

“To maximize expertise, CCG utilizes different entities to cover each of the functions as their primary strength,” explains Dr. Tarnuzzer. Extracting DNA and RNA from biospecimens is a distinct task from reliable long-term storage of samples, and both are quite unlike implementing a digital database of complex clinical terms.

Create Consistency With a Checklist

Researchers following the same protocol still vary in what they actually do, as most who have worked in a biological wet lab will attest. “Back in grad school, some folks even hid personal bottles of reagents, their own secret sauce,” recalls Dr. Tarnuzzer. “This culture leads to reproducibility problems, hinders research progress, and would be completely detrimental to a standardized genomic characterization pipeline such as ours.”

TCGA’s remedy to this has been to keep checklists, standardize data and processes, and maintain copious and thorough documentation, available upon request, of every step and procedure—well-known business best practices. To help maintain consistency and build upon past experience, CCG utilizes three types of checklists:

- Standard Operating Procedures (SOPs) – Step-by-step instructions for everything from receiving different types of packages to when to print and apply a barcode label to a sample. “Unless you define a pipeline, you end up with a group of unreproducible processes,” cautions Dr. Tarnuzzer. “If a biospecimen processing facility needs to be added or swapped later, they can easily replicate the function by following the SOPs.”

- Quality Management System (QMS) – A system for documenting all of the processes and procedures of the pipeline. An ISO-like QMS allows CCG to continuously monitor the status and quality of biospecimens and other aspects of the entire pipeline, identify deviations and address them on an ongoing basis. “When multiple samples suddenly failed characterization in the past, the QMS let us quickly trace the problem to a faulty batch of reagents,” recalls Dr. Tarnuzzer. Table 3 details some of the major quality checks performed by the BCR.

- Clinical data inventory – A checklist of required clinical data for each project. This checklist enables CCG to understand exactly what clinical data is available at a site and if the site meets the requirements of the study before starting a collaboration.
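The reagent-batch anecdote in the QMS item above suggests one simple use of well-kept process records: grouping characterization outcomes by reagent lot to see where failures cluster. The sketch below is a minimal illustration under assumed record fields (`reagent_lot`, `passed`) and an arbitrary 50% failure threshold; it does not describe how CCG's QMS is implemented.

```python
from collections import Counter


def failure_rate_by_lot(records):
    """Return {reagent_lot: fraction of samples that failed} from QC records."""
    totals = Counter()
    failures = Counter()
    for rec in records:
        totals[rec["reagent_lot"]] += 1
        if not rec["passed"]:
            failures[rec["reagent_lot"]] += 1
    return {lot: failures[lot] / totals[lot] for lot in totals}


def suspect_lots(records, threshold=0.5):
    """Return, in sorted order, the lots whose failure rate meets the threshold."""
    rates = failure_rate_by_lot(records)
    return sorted(lot for lot, rate in rates.items() if rate >= threshold)


# Hypothetical QC log: each record ties a sample to the reagent lot used on it.
records = [
    {"sample_id": "S1", "reagent_lot": "LOT-A", "passed": True},
    {"sample_id": "S2", "reagent_lot": "LOT-A", "passed": True},
    {"sample_id": "S3", "reagent_lot": "LOT-B", "passed": False},
    {"sample_id": "S4", "reagent_lot": "LOT-B", "passed": False},
    {"sample_id": "S5", "reagent_lot": "LOT-B", "passed": True},
]

print(suspect_lots(records))
# ['LOT-B']
```

The point is less the arithmetic than the record keeping: the grouping is only possible if every sample's lot number was documented at the time of processing.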

Be Prepared for the Plan to Change

“In short, make a plan, then be prepared for that plan to change,” laughs Dr. Tarnuzzer. “Remember that each piece of the overall pipeline is interdependent and there will be fluctuations.”

Planning a large-scale genomics study in some ways is akin to preparing for a disaster. Along with anticipating problems, communication is key in handling the fluctuations and deviations that are bound to occur. Even with over a decade’s experience, CCG is still continuously learning and tweaking the pipeline to adjust to such changes.

While there is no single, optimal pipeline for any project, let alone all projects, CCG has learned many lessons in biospecimen processing that are worth considering before diving into your own genomic characterization study.

References

1. Zenklusen, Jean C., et al. Collaborative Genomics Projects: A Comprehensive Guide. Academic Press, 2016.
