HUMAN BRAIN PROJECT

The little android with a sense of touch

The ability to experience sensations is a typical feature of mammals, and one that robots are beginning to imitate. With the help of artificial skin and algorithms, Czech researchers have made a small humanoid robot aware of human contact, and even able to notice when someone is invading its 'living' space.

Matej Hoffmann with iCub and Nao humanoid robots. / Duilio Farina, Italian Institute of Technology

Measuring little more than one meter in height, the iCub robot has the proportions of a four-year-old child. It has 53 electric motors to move its joints, two cameras that function as eyes, two microphones for hearing and something similar to a sense of touch, thanks to 4,000 pressure-sensitive sensors.

"The robot can experience the world in a similar way to that of a child and has the potential to develop a similar type of cognition," Matej Hoffmann, the main researcher of the Czech project RobotBodySchema, has explained to Sinc.

His team is one of many using this open-source humanoid robot, designed by the Italian Institute of Technology. In their case, they chose it because its body has proportions similar to those of a baby and because of its unique electronic skin.

Disciplines as disparate as philosophy, psychology, linguistics, neuroscience, artificial intelligence and robotics have spent decades studying cognition, the faculty that processes information and includes skills such as learning, reasoning, attention and feelings.

“The robot can experience the world in a similar way to that of a child,” says Hoffmann

While psychology and neuroscience favour studies with people, artificial intelligence is more inclined towards computer models, interpreting that faculty as mere information processing.

"Cognition is inseparable from the physical body and its sensory and motor systems, so computational models are not enough," says Hoffmann, who heads the Humanoid and Cognitive Robotics group at the Czech Technical University in Prague.

The advantage of robotics and its androids when studying these processes is that they take contact with the environment into account. In addition, they can simulate changes or injuries that could not be inflicted on human subjects.

An artificial skin to feel

The best example of contact is when one person touches another. Can a robot notice that sensation and identify the part of the body where it occurred? This is precisely what Hoffmann and his team have achieved.

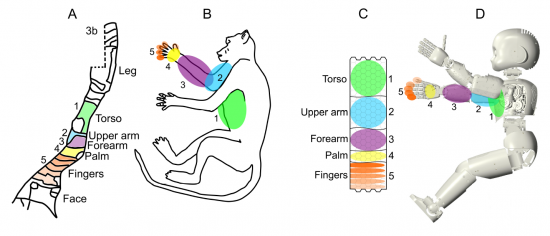

Thanks to the thousands of sensors that make up the humanoid's artificial skin and the development of a new algorithm, the engineer and PhD student Zdenek Straka has succeeded in making the robot develop and learn a map of the surface of its skin, known as a homunculus, similar to the one generated by the brains of humans and other primates.

"A touching of the robot’s body is transmitted to a particular region of this map. It will then be up to the android to figure out how to interpret or use that information," says Hoffmann. The algorithm also adapts to bodily changes. If a part of the skin stops feeling, the robot's 'brain' reassigns that area to other parts of the body.

They have succeeded in making the robot develop and learn a map of the surface of its skin similar to that generated by the human brain
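
The article does not describe the team's algorithm in detail. As a rough illustration only, a classic way to learn a topographic map of this kind is a Kohonen self-organizing map. The sketch below assumes a hypothetical one-dimensional strip of skin (touch locations between 0 and 1) and a row of ten map units; all names and parameters are invented for the example. It also imitates the adaptation described above: when half of the skin goes numb, the map units drift toward the skin that still responds.

```python
import math
import random


def best_unit(weights, touch):
    """Index of the map unit whose preferred skin location is closest to the touch."""
    return min(range(len(weights)), key=lambda i: abs(weights[i] - touch))


def train(weights, touches, epochs=20, lr0=0.5, radius0=3.0):
    """Kohonen-style update: the winning unit and its map neighbours move toward each touch."""
    w = list(weights)
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac) + 0.01          # learning rate decays over time
        radius = radius0 * (1.0 - frac) + 0.5   # neighbourhood shrinks over time
        for touch in touches:
            win = best_unit(w, touch)
            for i in range(len(w)):
                influence = math.exp(-((i - win) ** 2) / (2 * radius ** 2))
                w[i] += lr * influence * (touch - w[i])
    return w


rng = random.Random(0)  # fixed seed so the run is repeatable
n_units = 10
initial = [rng.random() for _ in range(n_units)]

# Touches spread over the whole (hypothetical, 1-D) skin strip in [0, 1].
full_skin = [rng.random() for _ in range(500)]
body_map = train(initial, full_skin)

# Simulate the region [0, 0.5) going numb: only touches on [0.5, 1] still arrive.
remaining_skin = [t for t in full_skin if t >= 0.5]
adapted_map = train(body_map, remaining_skin)

# Count how many map units represent the still-sensitive half, before and after.
units_before = sum(1 for w in body_map if w >= 0.5)
units_after = sum(1 for w in adapted_map if w >= 0.5)
print(units_before, units_after)
```

In this toy version, `best_unit` plays the role of "the region of the map" that a touch is transmitted to, and the count of units covering the sensitive half grows after the simulated loss of sensation.
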

"Touch is a somewhat underestimated sense, but it is actually extremely important," emphasizes Hoffmann. Thanks to touch we are aware of the entire surface of the body, which allows us to perceive different types of contact, from a caress to a collision. According to experts, equipping robots with this sense will improve their future contact with humans.

Robots also need their space

Returning to humans, some people feel uncomfortable with excessive contact or closeness with their interlocutor, because their living space, known as peripersonal space, is being invaded. Hoffmann and his team are trying to get androids to recognize this space.

"It is very important for the safe interaction between robots and humans, especially in the context of those collaborators who leave the security areas and share living space with humans," the expert points out.

As a first step, Hoffmann and Straka have developed a computer model based on a neural network architecture in a two-dimensional scenario, in which objects approach a surface that simulates the skin.

At present, the researcher, together with his students Zdenek Straka and Petr Švarný, is applying the model to humanoids. "Robots must be able to anticipate contacts when something crosses the boundary of their safety zone," states Hoffmann.
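
To make the idea of anticipating contact concrete, here is a minimal sketch of the two-dimensional scenario. It is not the team's neural network model: it swaps in a simple constant-velocity extrapolation, and the names `Observation`, `predict_contact` and `in_safety_zone`, as well as the `margin` and `horizon` values, are hypothetical choices made for the example. The skin is taken to be the line y = 0.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Observation:
    x: float   # position along the simulated skin surface
    y: float   # height above the skin (the skin is the line y = 0)
    vx: float  # velocity along the skin
    vy: float  # velocity away from (+) or toward (-) the skin


def predict_contact(obs: Observation) -> Optional[Tuple[float, float]]:
    """Return (time_to_contact, contact_x), or None if the object is not approaching."""
    if obs.vy >= 0 or obs.y <= 0:
        return None
    t = -obs.y / obs.vy            # time until y reaches 0 at constant velocity
    return t, obs.x + obs.vx * t   # where along the skin the touch would land


def in_safety_zone(obs: Observation, margin: float = 0.3, horizon: float = 1.0) -> bool:
    """True if the object is already within the margin or is predicted to touch soon."""
    if obs.y <= margin:
        return True
    contact = predict_contact(obs)
    return contact is not None and contact[0] <= horizon


approaching = Observation(x=0.0, y=1.0, vx=0.5, vy=-2.0)
receding = Observation(x=0.0, y=1.0, vx=0.0, vy=1.0)
slow = Observation(x=0.0, y=1.0, vx=0.0, vy=-0.1)

for name, obs in [("approaching", approaching), ("receding", receding), ("slow", slow)]:
    print(name, predict_contact(obs), in_safety_zone(obs))
```

A learned model would replace the fixed extrapolation with predictions that adapt to experience, but the output it needs to produce is the same: where and when a touch is expected.
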

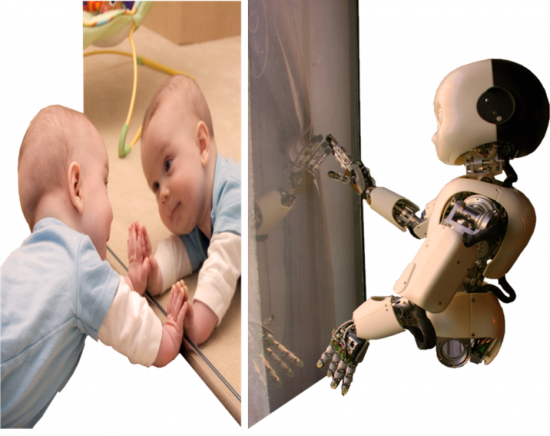

The sensations of a baby

Although they work mainly with androids, the researchers have also studied sensations experienced by humans, specifically babies between three and twenty-one months of age. With the help of psychologists, they placed a vibrating device on different parts of the babies' bodies and faces and analyzed how the infants reacted to these stimuli.

Researchers have also studied sensations experienced by humans, more specifically, babies of between three and twenty-one months of age

The main conclusion is that infants are not born with a map or a model of their own bodies. For this reason, their first year of life is decisive for them to be able to explore their bodies and learn the patterns that they will then repeat, "such as when they have to scratch themselves in a specific area," the engineer points out.

This and the previous investigations are part of the RobotBodySchema project, whose final objective is to study the mechanisms that the brain uses to represent the human body.

Analyzing the mind through the prism of robotics has an effect as fascinating as it is little explored: the humanization of machines. "We apply algorithms inspired by the brain to make robots more autonomous and safe," concludes Hoffmann.

RobotBodySchema is a Partnering Project of the Human Brain Project, one of the Future and Emerging Technologies flagship research initiatives (FET Flagships) of Horizon 2020, the European Union's framework programme for research funding.

The Sinc agency is participating in the European SCOPE project, coordinated by FECYT and financed by the European Union through Horizon 2020. The objectives of SCOPE are to communicate visionary results of research projects associated with the Graphene Flagship and the Human Brain Project, and to promote and strengthen relationships within the scientific community of the Future and Emerging Technologies flagship initiatives (FET Flagships) in the EU.
