Analytical Science in Precision Medicine

Professor Jeremy K. Nicholson, Head of the Department of Surgery and Cancer and Director of the MRC-NIHR National Phenome Centre, Faculty of Medicine

An interview with Professor Jeremy K. Nicholson, Head of the Department of Surgery and Cancer and Director of the MRC-NIHR National Phenome Centre, Faculty of Medicine, conducted by April Cashin-Garbutt, MA (Cantab)

It was recently announced that you will be presenting the upcoming Wallace H. Coulter Lecture at Pittcon 2018. What will be the main focus of your lecture?

Pittcon is an analytical conference, so naturally my talk will be about analytical chemistry and how analytical chemistry will become increasingly important in delivering healthcare solutions, not only for rich people, but also, hopefully, for poor people across the world.

I will illustrate the challenges that we face in 21st-century medicine, particularly the diversity of the emergent diseases that are occurring and the genetic and environmental drivers that change disease patterns and prevalence in whole populations. For instance, the obesity epidemic is a driver for a whole range of conditions, including cancer, diabetes and Alzheimer's disease.

The important point here is that we generally think about solving the problems we have now and about what technology or chemistry we need today. The world is changing very quickly, so we also need to address the problems coming over the horizon, or already on it, that we are going to face seriously in the next 20, 30 or 40 years.

These include antimicrobial resistance; the convolution of global warming with healthcare, which changes the way that parasites and infectious diseases operate on a global scale; and the fact that populations in western countries are living much longer, which gives us a whole range of diseases that were not so common before.

The idea is that we have to study chemistry and develop technology that is future-proofed, because, in the future, we won't have time to generate the technology. We have got a lot of work to do very quickly. It is a big challenge and everybody needs to pull together.

Credit: Apple's Eyes Studio/Shutterstock.com

What are the main challenges of 21st-century healthcare?

There are what we call emergent threats, which are partly due to there being a lot more people living on the planet than ever before and therefore more things that can go wrong with more people.

Emergent threats include diseases that may previously have been confined to the tropics but no longer are, because of modern transportation. Ebola is one example among many. We are seeing diseases in the West that we haven't faced before, and we are going to see more and more of them.

There are also diseases that have emerged due to changes in the biology of the organisms that live on or within us. Over the last 30 or 40 years, about 30 completely new emergent diseases have popped up and we should expect to see more of those.

There is also the remarkable interaction of the human body with the microbes that live inside us. We are supraorganisms, and our symbiotic microbes have changed our chemistry, our physiology and our disease risk. We have realized that we are not alone: we are not just looking at our own genetics, but at our biology as a whole.

Credit: Anatomy Insider/Shutterstock.com

We have to look at ourselves as a complex ecosystem that can develop a systemic disease, one that affects the whole ecology of the body. This increasingly seems to be the case for gut cancers, liver cancers and possibly a whole range of what we would normally call immunologically related diseases.

Much more has to be sorted out analytically than we thought. We have to be able to measure it all to understand it, which is where analytical chemistry, in all its various forms, comes in.

We are trying to apply analytical technologies to define human populations, human variability and how that maps onto ethnicity, diet, the environment and how all those things combine to create an individual’s disease. We also want to understand it at the population level.

The other thing I will emphasize is how personalized healthcare and public healthcare are just flip sides of the same coin. Populations are made of individuals. We want to improve the therapies and the diagnostics for individuals, but we also want to prevent disease in those individuals, which means also understanding the chemistry and biochemistry of populations.

How can analytical science in precision medicine help overcome some of these challenges?

When you think about all aspects of human biology that we measure, they are all based on analytical chemistry. Even genetics and genomics are carried out on a DNA analyzer, which is an analytical device that has sensitivity, reproducibility, reliability and all the other things that we normally think of as analytical chemists.

Analytical chemistry underpins every part of our understanding of biological function. Proteomics, for example, is based on a variety of technologies for measuring proteins. The different technologies put different bricks in the wall of our understanding of total biology.

What we understand much less is how those different bricks and units work together. We understand it quite well from the point of view of individual cells: how a cell works in terms of its DNA, RNA, protein production, metabolism, transport and so on. A huge amount is known about how the basic engine works.

When you start to put lots of different sorts of cells together, however, we understand much less. We know much less about how cells communicate locally and at long range, and how chemical signaling enables cells to talk to each other. When we start thinking about humans as supraorganisms, we also need to understand how our cells and bacterial cells talk to each other.

One of the great challenges is not only getting the analytical chemistry right for measuring the individual parts, but having the appropriate informatics to link the analytical technologies in ways that give information that is what we would call “clinically actionable”.

It is very easy to measure something in a patient, for example by taking blood and measuring a chemical in it. However, to understand what that measurement really means, it has to be placed in a framework of knowledge that allows a doctor to decide what to do next based on that piece of information. Across almost all analytical technologies, despite all the fancy new developments and whichever “omics” you are interested in, what is lacking is the ability to inform a doctor to the point where they can act.

One of the greatest challenges we face is not just using the new technologies, which have got to be reliable, reproducible, rugged and all the other things you need when you're making decisions about humans. It is also about visualizing data in ways that are good for doctors, biologists and epidemiologists to understand, so that they can help provide advice about healthcare policy in the future.

Aside from the challenges themselves, one of the points that I would like to make in my lecture is the deep thinking that needs to surround any technological development for it to be useful in the real world. That's ultimately where all our fates lie.

Will you be outlining any specific examples or case studies in your talk at Pittcon?

I will describe the challenges and big issues in the first five or ten minutes and then look at what creates complexity. I will give the supraorganism examples and, since I am mainly a metabolic scientist, I will show how, particularly from a metabolic point of view, microorganisms influence biochemical phenotypes in humans and how those relate to things like obesity risk, cancer risk and so on.

I will deliver it in a way that provides a more general understanding of the complexity and how it affects us as human beings. Then I will talk about more specific examples of how to ensure that a technology makes a difference to the patient. The first part is about how you study human biology, why it is complex and what you need to study analytically; then I will give some examples that operate on a clinical timescale.

If you are trying to understand population health and population biology, which is what epidemiologists do, it does not really matter how long it takes to get the answers, so long as they are the right answers, because no individual patient depends on that work. What you are trying to do is understand where diseases come from so that in the future you can make a better world by acting on that knowledge.

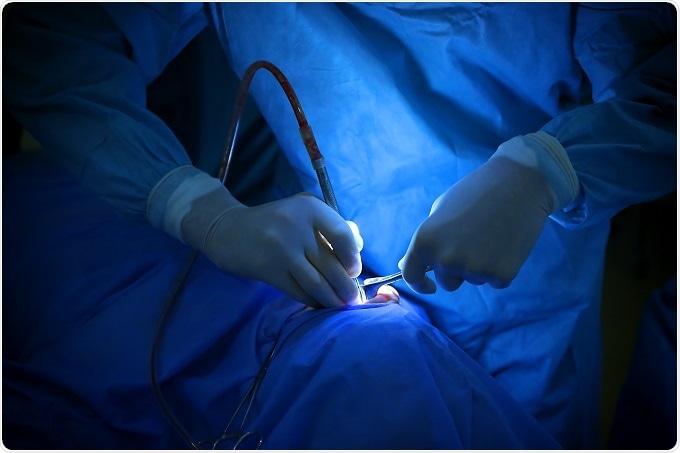

When somebody is ill, there is a timescale. In fact, there are different timescales, each presenting its own analytical challenges. If somebody has gut cancer, for example, they will need to be hospitalized and have some diagnostics performed. Physicians will determine exactly what sort of cancer it is, if they can, and then there will be either surgery or some sort of chemotherapy: a physical or chemical intervention of some sort.

Therefore, any analytical chemistry performed has got to be done within the timescale of the decision-making that the doctors make. In this example, it would have to be done within a day or two and the answer has to be interpretable by a doctor in that timescale.

For instance, there is no point doing a huge genomic screen on somebody if it takes three months to analyze the data; by then the doctors would have moved on and the patient may well be dead. All analytical technology has got to fit the constraints and timescales within which physicians operate.

Credit: Africa Studio/Shutterstock.com

The most extreme example is surgery, which involves real-time decision-making. The surgeon will cut a bit, have a look, cut another bit and then, based on a whole range of different background information, knowledge and potentially spectroscopic information, they will make decisions about whether to cut or not to cut.

The iKnife technology developed by Professor Zoltán Takáts, in which I had a part at Imperial, connects a surgical diathermy knife to a mass spectrometer, so the chemistry of the tissue can be read three times per second. Based on an annotated knowledge data set, we can then know exactly what the surgeon is cutting through. That is an example of an analytical technology that gives a surgeon unprecedented molecular knowledge in real time, and it is a real game-changer.
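To illustrate what reading tissue chemistry "based on an annotated knowledge data set" can involve, here is a minimal sketch of spectral library matching: each incoming spectrum is compared with annotated reference spectra by cosine similarity and given the label of the closest match. The reference spectra, tissue labels and four-bin layout are hypothetical toy values, and this is not the iKnife's actual classification method, which the interview does not describe.

```python
import numpy as np

# Hypothetical annotated reference library: each row is a binned,
# intensity-normalized mass spectrum with a known tissue label.
REFERENCE_SPECTRA = np.array([
    [0.9, 0.1, 0.0, 0.3],  # toy profile labeled "healthy"
    [0.1, 0.8, 0.5, 0.0],  # toy profile labeled "tumour"
])
REFERENCE_LABELS = ["healthy", "tumour"]


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine of the angle between two spectra (1.0 = identical shape)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def classify_spectrum(spectrum: np.ndarray) -> tuple[str, float]:
    """Return the label of the most similar reference spectrum and its score."""
    scores = [cosine_similarity(spectrum, ref) for ref in REFERENCE_SPECTRA]
    best = int(np.argmax(scores))
    return REFERENCE_LABELS[best], scores[best]


# In a real-time setting, each new spectrum (a few per second) would be
# classified as it arrives.
label, score = classify_spectrum(np.array([0.2, 0.7, 0.6, 0.1]))
print(label, round(score, 3))
```

Cosine similarity is used here because it compares the shape of a spectrum independently of its overall intensity; a clinical system would need a large, validated reference library and a statistically trained classifier rather than a single nearest match.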

Then there are intermediate timescales. If somebody is in critical care, for example, then by definition they are seriously ill. Their condition changes very quickly, over minutes to hours, so any analytical technology that informs on them has to be very, very fast, and the output has to be very quickly readable and interpretable by a doctor.

I think this is also very interesting from an analytical chemistry point of view. It's not just about what you are measuring; the whole protocol you construct has to be fit for purpose within the timescale relevant to medical decision-making, which is a huge challenge. Many of the people who develop these technologies do not necessarily think about them from the point of view of the doctor on the ward.

A few years ago, I used to give talks in which I put forward an idea that I called the "Minute-Man challenge." The Minutemen were American colonial militia who were ready to fight the British at a minute's notice. The Americans later built Minuteman missiles, which are ready to launch at any enemy within one minute of the go-code being given. The Minute-Man challenge for analytical chemistry is to get from a sample being provided to a complete diagnosis within a minute or less.

Nothing exists that does that yet, but we are working on solutions. I thought NMR spectroscopy would be the most likely technique to provide it, because you don't have to do anything to the sample; it is all based on radiophysics. Preparing samples and getting them ready for a machine obviously takes time, so whatever wins the Minute-Man challenge is going to be something that operates on the raw fluid or something incredibly close to it. The sample will almost certainly have to be injected directly into some sort of measuring device, so in mass spectrometry we start to think about direct-injection mass spectrometry. There is also a whole range of other atmospheric pressure methods, such as desorption electrospray ionization.

There is also the technology invented by Professor Zoltán Takáts, which can give you two- and three-dimensional tissue information. I am going to discuss the challenges of time and of actionability, as well as the challenge of providing a complete diagnosis very rapidly, effectively as a one-shot diagnosis.

Other things will come along. Whether there will be one methodology that answers all possible biological or medical questions is, to me, extremely doubtful, but I think technologies are available now, probably mainly revolving around mass spectrometry, that will allow that sort of diagnostic firepower.

Also, if you are using a zero-reagent, direct-injection methodology, the cost comes down. Time and cost go together, so if you want to study large populations of people, ideally you need to be able to test millions of samples at relatively low cost. You want something that can measure 10,000 things at once in less than a minute and cost you a dollar. That would be the dream.

I think we might not be that far away from being able to do that, so what I will try to do in my talk is juxtapose the big challenge ideas against the big analytical challenges and hopefully paint a picture that isn't entirely black.
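A back-of-the-envelope calculation shows why time and cost go together at population scale. The sketch below plugs in the "dream" figures (about one minute and one dollar per sample) for a hypothetical cohort of one million samples; the function name and the choice of ten instruments are illustrative assumptions, not figures from the interview.

```python
def campaign_cost(n_samples: int, minutes_per_sample: float,
                  dollars_per_sample: float, n_instruments: int) -> dict[str, float]:
    """Estimate instrument time, wall-clock time and total cost of a screen.

    Hypothetical helper. Assumes samples are split evenly across instruments
    that run around the clock with no downtime (an idealization).
    """
    minutes_per_day = 60 * 24
    total_minutes = n_samples * minutes_per_sample
    return {
        "instrument_days": total_minutes / minutes_per_day,
        "wall_clock_days": total_minutes / n_instruments / minutes_per_day,
        "total_cost_usd": n_samples * dollars_per_sample,
    }


# One million samples, one minute and one dollar each, on ten instruments.
print(campaign_cost(1_000_000, 1.0, 1.0, 10))
```

Under these idealized assumptions, one million one-minute samples amount to roughly 694 instrument-days, or about 69 days across ten instruments, for a total of one million dollars; an assay taking an hour per sample would stretch the same campaign sixty-fold.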

Which analytical techniques have been most important to your work to date?

I am a spectroscopist by training and I am well-known for NMR spectroscopy, but over the last 15 or 20 years, I've been doing mass spectrometry just as much as NMR. We have 13 high-field NMR machines in my department and about 60 mass spectrometers, all analyzing metabolism, which is quite a collection. I never thought there would be a day when I had more mass spectrometers than NMR spectrometers, but there you go!

Historically, I also worked on X-ray spectroscopy, analytical electron microscopy and atomic spectroscopy, but it was NMR that really made my life come alive from the point of view of biology. When I started doing this as a postdoc, I realized that NMR could make the sort of measurements we are talking about (the Minute-Man type of measurement) on many different things and extremely quickly, so I have been working on that for over 30 years.

Certainly, when I first started applying NMR to body fluids in the early 1980s, people thought I was completely mad. High-field NMR machines were for putting pure chemicals in to get structures out, and the idea of putting horse urine in an NMR machine would drive some of my colleagues completely crazy.

Aside from its being an abnormal use of a highly advanced spectroscopic technology, people thought it would be too complex to work out what all the thousands of components were. In fact, it was complex, and we still haven't worked out what all of them are after 30 years and a thousand man-years of work in my research group. However, we have sorted out thousands of signals and what they mean biologically.

NMR is the most important technique for me personally because it made me the scientist that I am, but the other thing I love about NMR is that it does not destroy anything. It is non-invasive and non-destructive. You can study living cells and look at molecular interactions, as well as concentrations. The binding of small molecules to large molecules can be studied, and those interactions have diagnostic value as well, which I think is underappreciated in the metabolic community.

Most people think mass spec must be better than NMR because it is more sensitive, which it is for most things, but NMR provides a whole set of information that mass spec could never obtain. Together, the two are the ideal combination if you want to study the structure, dynamics and diversity of biomolecules.

How do you think advances in technology will impact the field?

Technology advances in all ways, all the time, and the advance in analytical technology is accelerating. There are things that we can do now in mass spec and NMR, for instance, that we would have thought impossible five or ten years ago. The drivers in analytical chemistry are always improvements in sensitivity, specificity, reliability, accuracy, precision and reproducibility, but for clinical applications, and for the very large-scale applications that you need to study populations, robustness, reliability and reproducibility are the most important things. The ability to harmonize data sets is also very important: irrespective of where you are working in the world, others should be able to access and interpret your data.

It's not just advances in individual pieces of instrumentation that are important; it's how you use them to create harmonizable pictures of biology, which also has an informatic core. It is not just the analytical chemistry or technology itself, but how you use the data.

Let's compare NMR and mass spec. NMR uses radiophysics and is based on measuring the exact frequencies of nuclear spin transitions, which are characteristic of the particular molecular moiety being observed.

One of the beauties of NMR is that if you take, for example, a 600 MHz, 900 MHz or gigahertz-class NMR spectrum now, the same analysis of it would still be possible in a thousand or a million years, because it is a physical statement about the chemical properties of that fluid that will never change. Even if NMR spectrometers were to become more sensitive, the basic structure of the data would be identical in a thousand or a million years.
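Part of what makes NMR data so durable is how peak positions are reported: as a chemical shift in parts per million (ppm) relative to a reference signal, δ = (ν − ν_ref) / ν_ref × 10^6. Taking the nominal spectrometer frequency as ν_ref, a shift in ppm multiplied by the spectrometer frequency in MHz gives the offset in Hz. The sketch below uses 1.33 ppm as an illustrative peak position to show that the same signal sits at different offsets in hertz at 600 and 900 MHz but at the same ppm value; the helper names are hypothetical.

```python
def ppm_to_hz_offset(shift_ppm: float, spectrometer_mhz: float) -> float:
    """Offset in Hz from the reference signal: delta(ppm) * nu_ref(MHz)."""
    return shift_ppm * spectrometer_mhz


def hz_offset_to_ppm(offset_hz: float, spectrometer_mhz: float) -> float:
    """delta = (nu - nu_ref) / nu_ref * 1e6, with nu_ref = MHz * 1e6 Hz."""
    return offset_hz / spectrometer_mhz


peak_ppm = 1.33  # illustrative peak position
for field_mhz in (600.0, 900.0):
    offset = ppm_to_hz_offset(peak_ppm, field_mhz)
    print(f"{field_mhz:.0f} MHz: {offset:.1f} Hz from reference -> "
          f"{hz_offset_to_ppm(offset, field_mhz):.2f} ppm")
```

Because the ppm axis scales out the field strength, spectra recorded on different magnets can be placed on a common axis, which is one concrete reason the "basic structure of the data" stays comparable as instruments change.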

In mass spectrometry, we're changing the technology all the time. The ion optics change, the ionization modes change and all of these things affect how the molecules or the fragments fly through the mass spectrometer and how they are detected.

One of the greatest challenges in mass spectrometry, for instance, is therefore time-proofing the data. You do not want to have to analyze a million samples now and then find that in five years the technology is out of date and you have to analyze them all again.

Some technologies, such as NMR, are in effect much more intrinsically time-proofed than mass spectrometry and a whole range of other technologies that are changing all the time. One way forward is to use informatics to extract the principal features of spectroscopic data, which will be preserved irrespective of how those data were originally acquired. So there is a different sort of informatic challenge to do with time-proofing, which I think is very interesting.
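Principal component analysis (PCA) is one widely used chemometric way to extract the "principal features" of a set of spectra. The sketch below illustrates that general idea on a random, hypothetical matrix of spectra; it is not the specific time-proofing approach alluded to in the interview, and the `pca` helper is an illustrative name.

```python
import numpy as np


def pca(spectra: np.ndarray, n_components: int):
    """Project spectra (rows = samples, columns = spectral bins) onto
    their first principal components via SVD of the mean-centred data.

    Returns (scores, loadings, explained_variance_ratio).
    """
    centred = spectra - spectra.mean(axis=0)
    # Rows of vt are the principal axes (loadings), ordered by singular value.
    u, s, vt = np.linalg.svd(centred, full_matrices=False)
    scores = u[:, :n_components] * s[:n_components]
    loadings = vt[:n_components]
    explained = (s ** 2) / np.sum(s ** 2)
    return scores, loadings, explained[:n_components]


rng = np.random.default_rng(0)
toy_spectra = rng.normal(size=(20, 50))  # 20 hypothetical samples x 50 bins
scores, loadings, ratio = pca(toy_spectra, n_components=3)
print(scores.shape, loadings.shape, ratio.round(3))
```

One caveat on the design: principal features are only comparable across instruments or technology generations if the underlying spectra are first placed on a common variable axis (for example, shared chemical-shift or m/z bins).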

What other challenges still need to be overcome?

It is mainly about making analysis faster, cheaper and more reliable. I think the biggest challenge has to do with data sharing and the way that humans work, or don't work, together. If you pick up a copy of “Analytical Chemistry” any week, you will find that half the papers describe a new, better method for measuring X, Y or Z. It is always going to be a superior method, since otherwise it would not get published in the journal. Analytical chemists who use current methods always think they can do better. That is the way they think, but when you are working clinically, you've got to draw the line somewhere, decide to harmonize and accept that you may have to wait some time until something significantly better comes along.

An interesting problem going forward will be settling on analytical protocols that everybody can accept, despite knowing that they are not perfect. It brings us back to the three Rs: ruggedness, reliability and reproducibility. When studying humans, populations or clinical situations using analytical chemistry, the three Rs are always going to be more important than absolute sensitivity and specificity (although these are always important), which are what motivate most analytical chemists. The three Rs require very strict adherence to harmonized protocols that are sharable. I think that is going to be a challenge to people's egos.

What do you think the future holds for analytical science in precision medicine?

Precision medicine is about getting an optimized interventional strategy for a patient, based on a detailed knowledge of that patient's biology. That biology is reflected in the chemistry of the body at all the different organizational levels, whether it is genes, proteins, metabolites, or pollutants for that matter.

All the analytical chemistry technologies that inform on a patient's or an individual's complexity will be important in the future. With respect to future healthcare challenges, analytical chemistry is absolutely key; it is core to solving all of the emergent problems, as well as the ones we already face.

How do you hope the National Phenome Centre will contribute?

We set up the National Phenome Centre to look at big populations and personalized healthcare challenges. We are now in our fifth year of operation and are running lots of projects that are generating some tremendous findings.

We have now created an International Phenome Centre Network, where there is a series of laboratories built with core instrumentation that is either identical or extremely similar to ours. This means we can harmonize and exchange data, methodologies and therefore biology.

Imperial College hosted the first in the world, the National Phenome Centre, and then the Medical Research Council funded the Birmingham Phenome Centre about two years ago. We have transferred all our technologies and methods over, and they have an NMR and mass spectrometry core that is effectively the same as ours, so we have completely interoperable data. In fact, we've just finished a huge trial that involved Birmingham University as well.

There is also one in Singapore now, the Singapore Phenome Centre. The Australian Phenome Centre has just been funded by the second-largest grant ever given in Australia, and there is a whole series of others lined up. We have already formed the International Phenome Centre Network, which was formally announced by Sally Davies, Chief Medical Officer for England, in November last year.

This year, we will start our first joint project, the stratification of diabetes across the phenome centres that are up and running and using the same technology. We can carry out international harmonization of diabetes biology for the first time ever, so we are putting our money where our mouth is. To me, this is the most exciting thing to come out of our work: the fact that there are now groups around the world that agree that harmonization is the way forward to get the best out of international biology and to create massive data sets of unprecedented size and complexity to describe human biology.

This is another informatic challenge, but that's the future. There are a lot of dark emergent problems in human disease and we are going to go through some tough times over the next 30 or 40 years. However, we are starting to get our act together with things like the Phenome Centre Network and if it is not the network itself, it will be groupings like it that will rise to these great challenges facing humanity in the 21st century.

Where can readers find more information?

- Pittcon 2018: https://pittcon.org/

- National Phenome Centre: http://www.imperial.ac.uk/phenome-centre

- International Phenome Centre Network: http://phenomenetwork.org/

About Professor Jeremy K. Nicholson

- Professor of Biological Chemistry

- Head of the Department of Surgery, Cancer and Interventional Medicine

- Director of the MRC-NIHR National Phenome Centre

- Director of the Centre for Gut and Digestive Health (Institute of Global Health Innovation)

- Faculty of Medicine, Imperial College London

Professor Nicholson obtained his BSc from Liverpool University (1977) and his PhD in Biochemistry from London University (1980), working on the application of analytical electron microscopy and energy-dispersive X-ray microanalysis in molecular toxicology and inorganic biochemistry. After several academic appointments at London University (School of Pharmacy and Birkbeck College, London, 1981-1991), he was appointed Professor of Biological Chemistry (1992).

In 1998 he moved to Imperial College London as Professor and Head of Biological Chemistry and subsequently Head of the Department of Biomolecular Medicine (2006) and Head of the Department of Surgery, Cancer and Interventional Medicine in 2009 where he runs a series of research programs in stratified medicine, molecular phenotyping and molecular systems biology.

In 2012 Nicholson became the Director of the world’s first National Phenome Centre, specializing in large-scale molecular phenotyping, and he also directs the Imperial Biomedical Research Centre Stratified medicine program and Clinical Phenome Centre. Nicholson is the author of over 700 peer-reviewed scientific papers and many other articles and patents on the development and application of novel spectroscopic and chemometric approaches to the investigation of metabolic systems failure, metabolome-wide association studies and pharmacometabonomics.

Nicholson is a Fellow of the Royal Society of Chemistry, The Royal College of Pathologists, The British Toxicological Society, The Royal Society of Biology and is a consultant to several pharmaceutical/healthcare companies.

He is a founder director of Metabometrix (incorporated 2001), an Imperial College spin-off company specializing in molecular phenotyping, clinical diagnostics and toxicological screening. Nicholson’s research has been recognised by several awards, including the Royal Society of Chemistry (RSC) Silver (1992) and Gold (1997) Medals for Analytical Chemistry; the Chromatographic Society Jubilee Silver Medal (1994); the Pfizer Prize for Chemical and Medicinal Technology (2002); the RSC Medal for Chemical Biology (2003); the RSC Interdisciplinary Prize (2008); the RSC Theophilus Redwood Lectureship (2008); the Pfizer Global Research Prize for Chemistry (2006); the NIH Stars in Cancer and Nutrition Distinguished Lecturer (2010); the Semmelweis-Budapest Prize for Biomedicine (2010); and the Warren Lecturer, Vanderbilt University (2015).

He is a Thomson Reuters ISI Highly Cited Researcher (2014 and 2015, Pharmacology and Toxicology; WoS H index = 108). Professor Nicholson was elected a Fellow of the UK Academy of Medical Sciences in 2010, Lifetime Honorary Member of the US Society of Toxicology in 2013, and Honorary Lifetime Member of the International Metabolomics Society in 2013.

He holds honorary professorships at 12 universities (including the Mayo Clinic, USA; University of New South Wales; Chinese Academy of Sciences, Wuhan and Dalian; Tsinghua University, Beijing; Shanghai Jiao Tong University; and Nanyang Technological University, Singapore). In 2014 he was elected an Albert Einstein Professor of the Chinese Academy of Sciences.

Sponsored Content Policy: News-Medical.net publishes articles and related content that may be derived from sources where we have existing commercial relationships, provided such content adds value to the core editorial ethos of News-Medical.Net which is to educate and inform site visitors interested in medical research, science, medical devices and treatments.
